FOMO No More: How Model Context Protocol is Supercharging AI Capabilities

I've spent the last month deep-diving into Model Context Protocol (MCP) so you don't have to. Between wading through GitHub repos, testing implementations, and reading every piece of documentation I could find, I've developed strong opinions on whether this is worth your time.

The AI landscape keeps evolving at a maddening pace. Yesterday it was a plain conversational interface, today it's function calling and agents, tomorrow who knows? The result: developers constantly rebuilding the same core functionality just to handle the latest AI feature.

MCP cuts through this chaos with a refreshingly pragmatic approach. It's not introducing yet another AI capability; it's standardizing how all AI capabilities connect to the real world. Think of it as function calling on steroids.

Why MCP Actually Matters

The whole point of MCP is solving the fragmentation problem. It's not trying to replace function calling or agents or any other extension approach. It's creating a universal standard that can accommodate all of them.

Think about it like this: before USB, connecting things to computers was chaos. Every device needed its own proprietary connector, and nothing worked with anything else. The MCP developers themselves lean on this analogy, describing MCP as a USB-C port for AI applications. They're aiming to do for AI what USB did for hardware: create a standard interface that just works across the entire ecosystem.

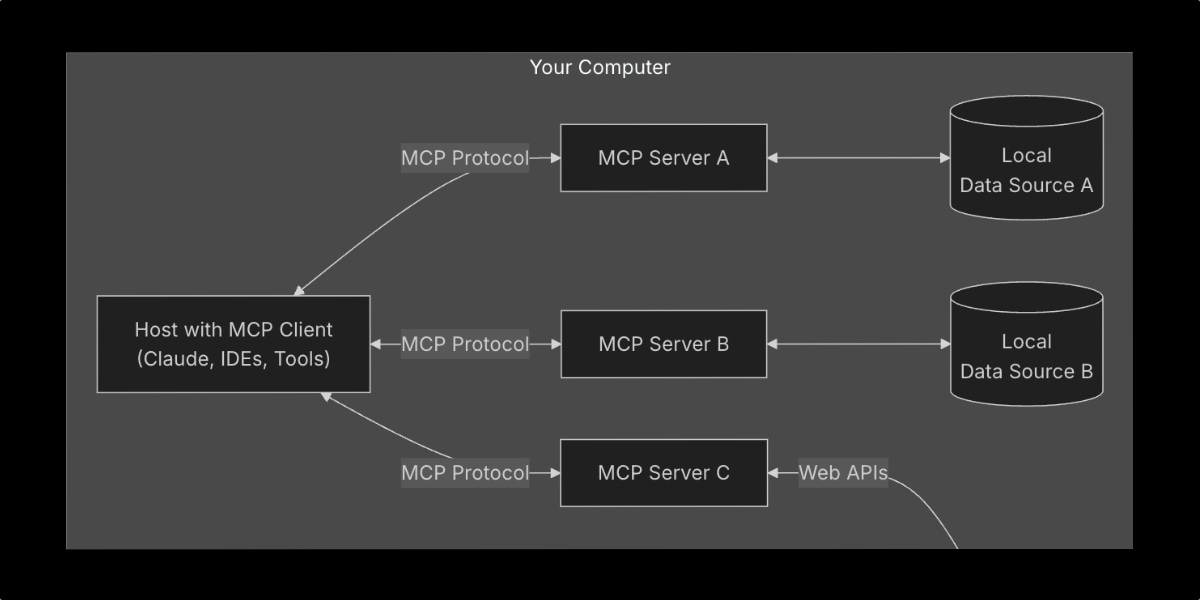

When an LLM needs real-time information or specialized capabilities, MCP creates a consistent way to request and receive that data through a client-server architecture. The model doesn't need to know the specifics of how each capability works under the hood. It just needs to follow the protocol.
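To make the client-server exchange concrete: MCP messages are JSON-RPC 2.0 requests and responses, and a tool invocation uses the `tools/call` method with a tool `name` and its `arguments`. The sketch below is a minimal in-process illustration, with no real transport and no SDK; the `get_weather` tool and its canned answer are hypothetical. A real server would communicate over stdio or HTTP, typically through one of the official SDKs.

```python
import json

# Hypothetical tool implementation; a real server would call an actual weather API.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS = {"get_weather": get_weather}

def handle(raw: str) -> str:
    """Toy MCP-style server: parse a JSON-RPC 2.0 request and return a JSON-RPC response."""
    req = json.loads(raw)
    if req.get("method") == "tools/call":
        params = req["params"]
        fn = TOOLS.get(params["name"])
        if fn is None:
            # Unknown tool: report a JSON-RPC error (-32602 = invalid params).
            return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                               "error": {"code": -32602,
                                         "message": f"Unknown tool: {params['name']}"}})
        text = fn(**params.get("arguments", {}))
        # Tool results carry a list of typed content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})
    # Any other method: JSON-RPC "method not found".
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                       "error": {"code": -32601, "message": "Method not found"}})

def call_tool(request_id: int, name: str, arguments: dict) -> dict:
    """Client side: build a tools/call request, 'send' it, and decode the reply."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    return json.loads(handle(json.dumps(request)))

if __name__ == "__main__":
    reply = call_tool(1, "get_weather", {"city": "Lisbon"})
    print(reply["result"]["content"][0]["text"])
```

The point of the design is that the client only ever speaks this envelope; swapping `get_weather` for any other tool changes nothing on the client side.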

What's amazing is how simple yet powerful this approach is. The host application keeps a connection open to each MCP server, so the LLM can be fed up-to-date context and reach real-world capabilities without the usual headache of a completely different integration for each vendor.

Will Models Get Better at Using MCP?

Current LLMs are already getting fine-tuned for function calling. Claude, GPT-4, and others are increasingly good at it. But there's a strong case that models will get even better at MCP specifically.

This excellent work on fine-tuning Llama 3.1 shows how even smaller models can be optimized for structured function calling. The same techniques could make models significantly better at MCP interactions, especially since MCP has such a well-defined interface.

If you're curious which current models might work best with MCP, check out the Gorilla function calling leaderboard. Its rankings are a decent proxy for which models will likely excel at MCP: because MCP builds on and standardizes function calling, models that already invoke functions reliably are well positioned to take advantage of it. The key difference is that function definitions are typically static, written into each application ahead of time, whereas MCP lets the host discover the available tools and their capabilities at runtime over the 1:1 connection between each MCP client and its server. That up-to-date context lets models make better-informed decisions about which tools to use and how, without every application hardcoding its own function schemas.
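Here is a sketch of how that runtime discovery can feed ordinary function calling. A `tools/list` result contains a `tools` array whose entries have a `name`, a `description`, and a JSON Schema `inputSchema`; a host can translate those into whatever format its model API expects. The target shape assumed here is the OpenAI Chat Completions `tools` format, and the tool definitions themselves are hypothetical.

```python
def to_function_tools(list_result: dict) -> list:
    """Translate an MCP tools/list result into OpenAI-style function definitions."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool["name"],
                "description": tool.get("description", ""),
                # inputSchema is plain JSON Schema, so it maps directly onto "parameters".
                "parameters": tool["inputSchema"],
            },
        }
        for tool in list_result["tools"]
    ]

# Hypothetical tools/list result from a server.
list_result = {
    "tools": [
        {
            "name": "get_weather",
            "description": "Get the current weather for a city",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }
    ]
}
tools_v1 = to_function_tools(list_result)

# Later the server gains a tool; the next discovery picks it up with no client code changes.
list_result["tools"].append(
    {
        "name": "create_event",
        "description": "Create a calendar event",
        "inputSchema": {"type": "object", "properties": {"title": {"type": "string"}}},
    }
)
tools_v2 = to_function_tools(list_result)

print([t["function"]["name"] for t in tools_v1])
print([t["function"]["name"] for t in tools_v2])
```

The translation is deliberately thin: because MCP already describes tools with JSON Schema, the host mostly re-wraps what the server advertises rather than maintaining its own copy.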

Surprisingly Broad Industry Support

I was skeptical when I first heard Anthropic created MCP. The last thing we need is another proprietary standard from a single AI company. But the adoption has been genuinely surprising.

OpenAI, Anthropic's main competitor, recently added MCP support to their Python SDK. When direct competitors start adopting each other's standards, something interesting is happening.

Zapier's aggressive move into MCP is another strong signal. They're positioning MCP as the gateway between AI and their thousands of app integrations. For anyone curious about what's possible, the Awesome MCP Servers repository has become the go-to resource.

MCP Invades the Apple Ecosystem

The Apple ecosystem is notoriously insular, so seeing rapid MCP adoption there is particularly telling. There are already several fascinating implementations:

The iOS Simulator MCP transforms iOS testing by turning LLMs into automated QA agents. Instead of manually validating each UI element after development, developers can prompt the AI to "verify all accessibility elements," "enter text and confirm inputs," or "validate swipe actions," all without writing a single test script. It can even take screenshots and record interaction videos as documentation. Imagine how much time you could save by letting the AI run through standard validation flows right after implementing a feature, catching subtle UI inconsistencies that would otherwise have shipped to production or only been caught by manual testing.

For those of us constantly switching between Xcode and Cursor, the XcodeBuild MCP is a huge time-saver. Building and running iOS apps directly from Cursor, without hopping back to Xcode, while the AI deals with the complexity for us, feels like the future.

If your team uses Tuist, it has just added MCP support too. Tuist's implementation exposes project dependency graphs and configuration details, letting you ask questions about your architecture or get help troubleshooting build issues directly from your AI assistant. Check out their docs for more details.

MCP's Swift SDK is the official Swift implementation of the Model Context Protocol, enabling developers to build custom MCP clients and servers natively in the Apple ecosystem. Supporting everything from iOS to macOS and even visionOS, it provides a comprehensive API for working with tools, resources, and prompts as defined in the MCP specification. What's particularly impressive is how it leverages Swift's strong type system and async/await to make MCP interactions feel natural to Swift developers.
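The tools/resources/prompts split is part of the protocol itself, so any SDK, Swift or otherwise, ends up negotiating it. As a language-agnostic sketch (Python, no SDK), a client can read the `capabilities` object a server returns from `initialize` and only send the list requests for primitives the server advertises. The server response below is a hand-written example, not output captured from a real server.

```python
# Hand-written example of an `initialize` result from a hypothetical server.
init_result = {
    "protocolVersion": "2024-11-05",
    "capabilities": {"tools": {"listChanged": True}, "resources": {}},
    "serverInfo": {"name": "example-server", "version": "0.1.0"},
}

# Each MCP primitive has its own list method.
LIST_METHODS = {
    "tools": "tools/list",
    "resources": "resources/list",
    "prompts": "prompts/list",
}

def discovery_requests(init_result: dict, start_id: int = 1) -> list:
    """Build JSON-RPC list requests only for the primitives the server advertises."""
    caps = init_result.get("capabilities", {})
    requests = []
    for primitive, method in LIST_METHODS.items():
        if primitive in caps:
            requests.append({"jsonrpc": "2.0", "id": start_id + len(requests), "method": method})
    return requests

for req in discovery_requests(init_result):
    print(req["id"], req["method"])
```

Gating on advertised capabilities keeps a client from issuing requests (here, `prompts/list`) that the server never claimed to support.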

Powered by the Swift SDK and built by the same developers, iMCP is a macOS productivity-suite MCP server that lets AI systems interact with core macOS applications through a unified protocol. With access to contacts, calendar, and weather data, users can issue complex multi-system requests like "schedule lunch with Dave next Friday if it's not raining," and iMCP will handle checking the weather forecast and creating the calendar entry across multiple applications, without any additional coding or API access setup.
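A request like "schedule lunch with Dave next Friday if it's not raining" decomposes into a short chain of tool calls that the host runs on the model's behalf. The sketch below hardcodes the plan a model might produce so the control flow is visible. The tool names (`contacts_search`, `weather_forecast`, `create_event`), their arguments, and the canned data are all hypothetical and are not iMCP's actual tools; `call_tool` stands in for a `tools/call` round trip.

```python
from datetime import date, timedelta

# Hypothetical tools a macOS MCP server might expose; canned data, no real system APIs.
def contacts_search(name):
    return {"name": name, "email": f"{name.lower()}@example.com"}

def weather_forecast(day):
    # Pretend it rains on the 18th of any month so both branches can be exercised.
    return {"day": day, "rain": day.endswith("-18")}

def create_event(title, day, attendee):
    return {"title": title, "day": day, "attendees": [attendee]}

TOOLS = {
    "contacts_search": contacts_search,
    "weather_forecast": weather_forecast,
    "create_event": create_event,
}

def call_tool(name: str, arguments: dict) -> dict:
    """Stand-in for an MCP tools/call round trip: a tool name plus JSON-style arguments."""
    return TOOLS[name](**arguments)

def next_friday(today: date) -> date:
    """The first Friday strictly after `today`."""
    days_ahead = (4 - today.weekday()) % 7 or 7
    return today + timedelta(days=days_ahead)

def schedule_lunch_if_dry(person: str, today: date):
    """A plan a model might produce: look up the contact, check the weather, then book or skip."""
    contact = call_tool("contacts_search", {"name": person})
    day = next_friday(today).isoformat()  # ISO date strings keep arguments JSON-friendly
    if call_tool("weather_forecast", {"day": day})["rain"]:
        return None  # Condition not met, so no event is created.
    return call_tool("create_event", {
        "title": f"Lunch with {contact['name']}",
        "day": day,
        "attendee": contact["email"],
    })

print(schedule_lunch_if_dry("Dave", date(2025, 4, 7)))
```

In a real session the model decides which tool to call next based on each result; the fixed sequence here just makes the conditional dependency between the weather check and the calendar write explicit.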

What really caught my attention was seeing Mattt, the iOS community's favorite hipster, putting his weight behind both iMCP and the Swift SDK. When someone who has shaped so much of the Apple development ecosystem jumps on board this early, it's a strong sign that this is something genuinely valuable, not just more AI hype.

In fact, you should read Mattt's article on MCP. He goes much deeper than I can here, offering the kind of technical insight that only comes from someone who isn't just exploring it but is actively building useful MCP servers and the Swift infrastructure that lets more people do the same.

So What Now?

MCP feels different from the usual AI hype cycle. It's solving a real problem developers face daily: how to extend LLMs with real-world capabilities in a consistent way.

The broad adoption across competitors, the rapid integration into specialized ecosystems like Apple's, and the focus on standardization rather than reinvention all point to MCP having serious staying power.

I'm curious: what would you build with MCP? Do you think it will become the definitive standard for AI extensions, or will we see competing approaches? Drop your thoughts on Twitter @filipealva and let me know if you're as cautiously optimistic as I am.

With MCP, I'm finally seeing AI move solidly toward an agentic approach that integrates seamlessly with the tools and applications we already use every day. This isn't just about making AI smarter; it's about making our existing software more powerful by giving AI models a standardized way to work with it. And this is only the beginning.